Today on AI For Humans:

OpenAI’s Audio-First Device Is Coming

Head of Instagram Talks AI Slop

Plus, Hands-On With Opus 4.5!

Welcome to the AI For Humans newsletter!

Did you know that the same companies that spent 20 years gluing our faces to screens now want to free us from them?

This shift represents either the greatest act of tech-industry atonement since... well, ever, or perhaps the long-awaited gateway to a more natural, less intrusive way to interact with technology.

The big news this week is that OpenAI is going all in on audio (again).

The Information grabs a big scoop.

According to The Information, the company has unified several engineering, product, and research teams over the past two months specifically to overhaul its audio models.

The goal? A much better audio experience for the long-awaited, Jony Ive-designed, audio-first personal device. Is it going to be a pen? Headphones? A puck you wear around your neck? Something else?

Who knows. But it is coming.

Ive himself has said he wants to make reducing device addiction a priority and sees audio-first design as a chance to "right the wrongs" of past consumer gadgets. This def feels weird considering it's coming from one of the main people responsible for this addictive black rectangle in my hand right now.

But the bigger question to ask is: what does OpenAI have to gain from this?

Why Audio, And Why Now?

Despite all its promise, no one has really cracked the best use case for AI audio yet.

And there's good reason for that.

First, you actually have to talk. We're used to talking to humans, not computers. And if you do it in public? People think you're weird.

Then there's the response problem. It has to feel real without trying to feel real. Do you get annoyed at the "um, oh yeah" stuff from OpenAI's Advanced Voice? That's them trying too hard. To be fair, no one has really nailed this.

In our work on AndThen, we've found that grounding the AI in an actual personality helps. But an assistant that's just... you, extended? That's harder.

The real prize here is the one-on-one AI assistant with growing knowledge of your life. That's the killer product. But we're still missing the memory and the privacy infrastructure to make it work.

A device that listens to you all day is a step in that direction. And if OpenAI can pull it off, they cement their place in the AI stack.

Big if, though.

Will Any Of This Actually Work?

We've been here before.

The Humane AI Pin burned through hundreds of millions before becoming one of tech's most high-profile failures. The Friend pendant, a necklace that records your life and offers "companionship," has sparked equal parts privacy panic and existential dread.

And now at least two companies, including one from Pebble co-founder Eric Migicovsky, are building AI rings that let you literally talk to your hand.

The form factors keep changing. But the thesis remains the same: audio is the interface of the future. Your home, your car, your face, everything becomes a listening post.

To me, one of the most interesting things about this shift is what it means for how we interact with AI.

OpenAI's new audio model (slated for early 2026) is supposedly going to sound more natural, handle interruptions like an actual conversation partner, and even speak while you're talking.

That last part is huge. Current models can't do real conversational turn-taking. It's always call-and-response. This would be actual dialogue.

This might finally be the version of Her that Sam Altman teased so long ago.

For anyone who's worked with voice AI, this is the dream.

Today's real-time APIs are good, but they still feel like talking to a very smart answering machine. The models are getting better at understanding not just what you're saying but how you're saying it: the cadence, the interruptions, the half-finished thoughts that are just part of how humans actually communicate.

For now, I think the opportunity for creators is obvious. Audio experiences like podcasts, interactive voice games, AI companions, ambient storytelling... all of it is about to get a lot more interesting. The infrastructure is finally catching up to the imagination.

And if the big tech companies are right about where this is going, the screen might not be the center of our digital lives for much longer.

Whether that's liberation or just a more subtle prison, well... we're all about to find out.

That’s all for this week, and we’ll be back on Thursday with a new episode!

-Gavin

3 Things To Know About AI Today

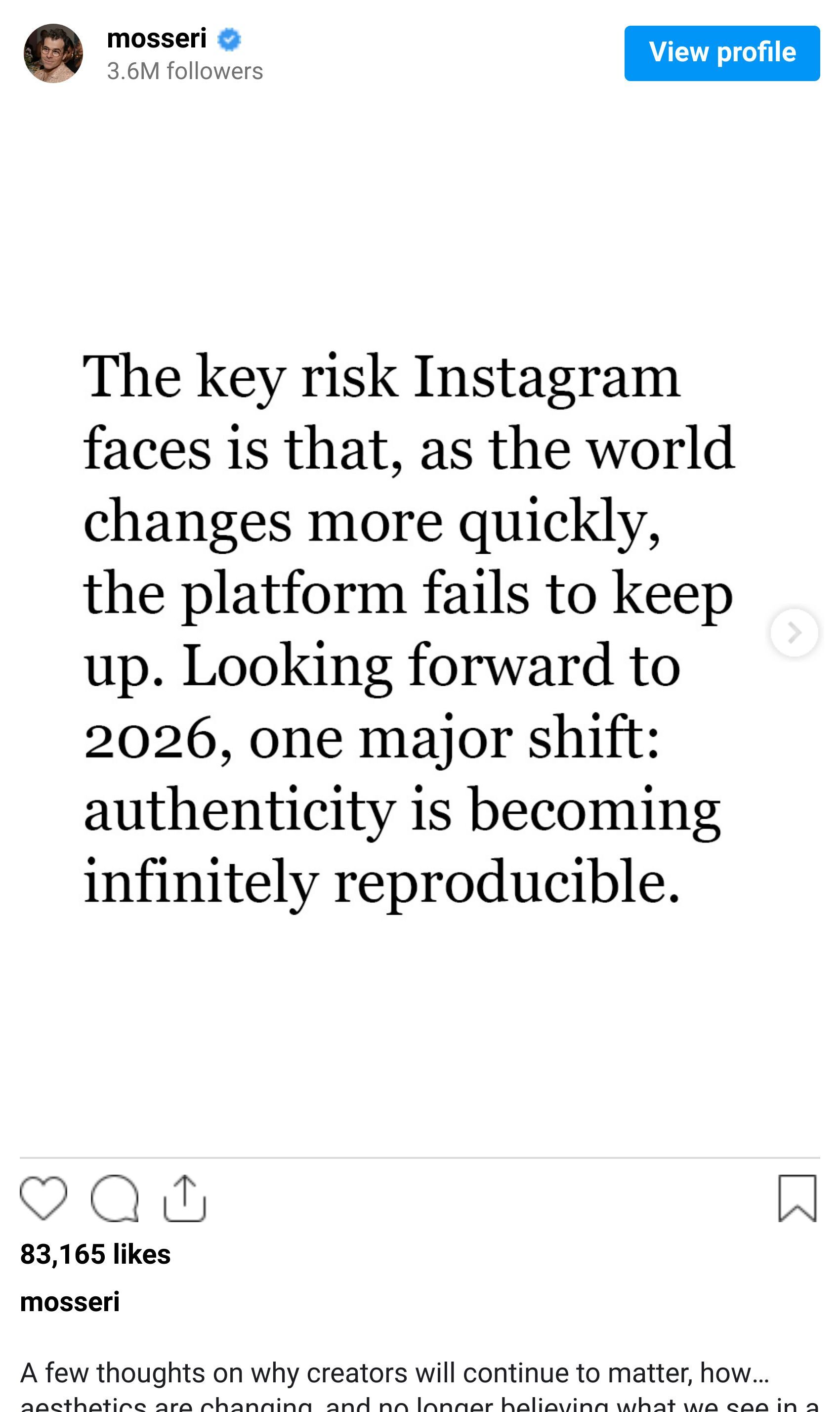

Instagram’s Adam Mosseri Talks AI Content

For those of you following the AI slop debate, we’ve got a new missive from Head of Instagram Adam Mosseri regarding how AI content will change not only that platform but all video content at large. And it’s a slideshow!

Some really useful takeaways here that I happen to agree with:

AI content is getting much better, and it’s not all slop

It will be harder to determine what’s AI and what’s not

Authenticity will only become more important for creators

Lo-fi human aesthetics will start to dominate across a lot of video platforms

Many people have thoughts on this, but I appreciate what Adam is saying here. He’s watching the platform he runs morph before his eyes and is trying to meet that change head-on.

Meta Acquires Manus In The Year of AI (M)agents

Over the holiday break you might’ve missed some pretty big news: Mark Zuckerberg went shopping and acquired Manus, the Chinese-founded AI agent start-up.

Mark’s superintelligence group at Meta continues to pursue a roll-up strategy: instead of building everything in-house, they’re buying a lot of start-ups and (we assume) looking to integrate all of them into one workflow soon.

If you’ve been following us for a bit, you’ll probably remember us covering Manus way back when it first appeared on the scene. It was one of the first companies to offer agents that go out and do stuff for you, something many of the bigger labs now have products for.

Now, Manus is a more robust agentic AI platform that will probably fit nicely into Mark’s AI plans. But when do we get to see those plans? Who knows.

Unitree’s Weird-Looking Humanoid Robot Shows Skills

We’ve talked about Unitree Robotics’ growing footprint quite a bit, and the leading Chinese robot manufacturer isn’t slowing down.

Their new humanoid, the strangely tall and skinny H2, is remarkably good at fluid motion and, in the video below, shows off its ability to roundhouse-kick balloons that are roughly the shape of human heads.

Remind me: Why are we making these again? 😆 👀 🤖

We 💛 This: Claude Opus 4.5 Is Very Good

We pride ourselves on trying all the latest models and telling you what’s good and what’s not so good, but sometimes there’s such a blast of new AI stuff that it’s hard to get to everything.

Over the holiday break, Claude Code and Anthropic’s latest model, Opus 4.5, got a real rush of positive, organic press from the coding enthusiasts of the world, and I realized I hadn’t spent nearly enough time trying them out myself.

Reader: It is SO good.

If you’re even remotely interested in how far you can push frontier LLMs, I suggest you give it a shot. You’ll need at least one month of a Pro account ($20) to get access, but it’s useful for a LOT, not just high-end coding tasks.

I’m working on a novel right now (yes, I write weird stuff outside of this) and, while I’m pretty opposed to AI writing any actual words people read, it can be super useful for organizing thoughts and large files if the system doesn’t screw it up.

Mostly, they still screw it up.

Opus 4.5 is the first LLM system that doesn’t. It was able to take in my large document (60k words), give me a beat-by-beat synopsis and then work with me to restructure the chapters to make the book more compelling. I was also able to use it to brainstorm ideas for character arcs and the like.

One big downside: The Pro plan gets eaten up fast. It kind of sucks that the $20-a-month plan doesn’t get you a ton of Opus 4.5 outputs, but it is what it is.

What’s even crazier is that 2026 will have even better AI models, and it’s hard to even imagine what this world looks like going forward. Get ready, y’all!

Are you a creative or brand looking to go deeper with AI?

Join our community of collaborative creators on the AI4H Discord

Get exclusive access to all things AI4H on our Patreon

If you’re an org, consider booking Kevin & Gavin for your next event!