Today on AI For Humans The Newsletter!

Kling 2.0 is here! It’s good… but expensive

OpenAI’s Rumored Plan To Launch a Social Network… Plus, GPT-4.1

NVIDIA’s new plan to make AI chips in Texas

Plus, our can’t-miss AI feature of the week!

Welcome back to the AI For Humans Newsletter!

So… how far away are we really from a fully AI-generated movie?

It’s a question that Kevin & I have been asking ourselves for a while. In the last few weeks, we’ve taken some significant steps forward (and probably cut a few months off our timeline) with the launches of Runway Gen-4, Google’s Veo 2, new tools from Higgsfield AI and now, Kling 2.0.

Kling is the best AI video model on the market right now, full stop.

I’ve been doing a bunch of video gens for <secret project> and kept coming back to Kling’s last model, Kling 1.6. It does the best job of giving lifelike animations to subjects and the sorts of subtle camera movements that can add a ton to your AI video productions.

What does 2.0 add to the mix?

Overall upgraded shot consistency, better physics (specifically lots of animals running), prompt coherence (specifically camera shots) & more. They’ve also launched a new update to their AI image model, Kolors.

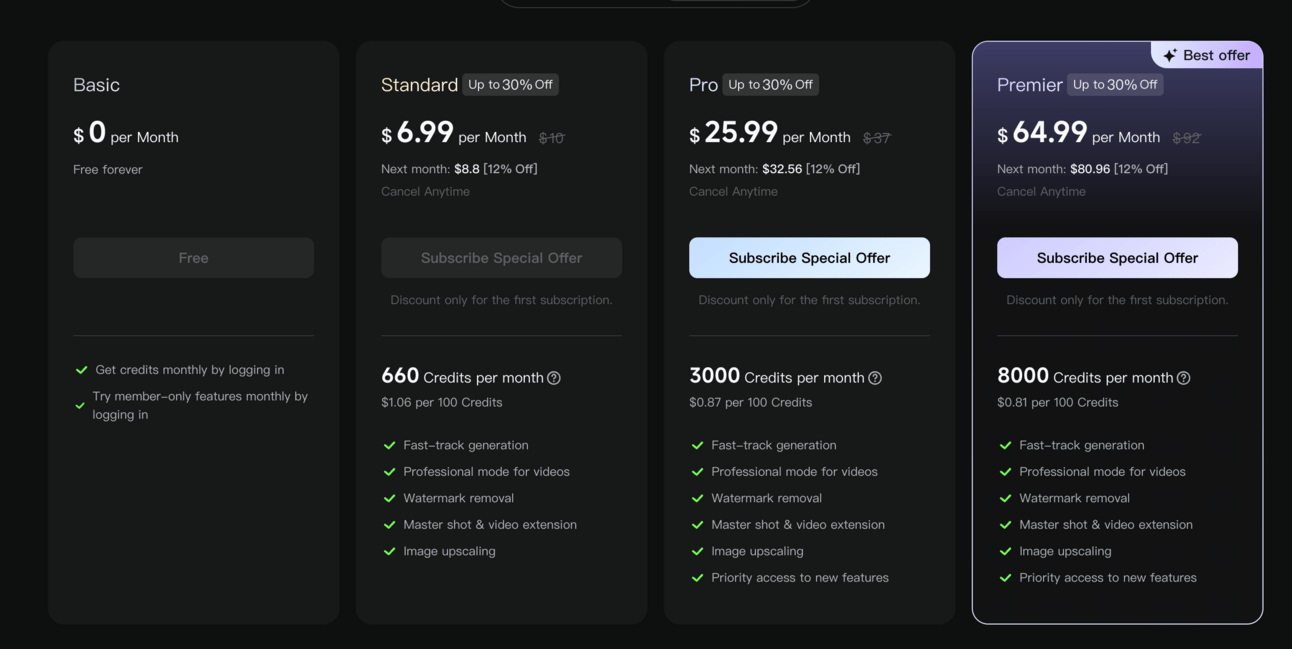

So if you’re an up-and-coming AI video filmmaker, why wouldn’t you use Kling 2.0? Well, it’s expensive… 100 credits per 5-second output (up from 70 credits prior). Depending on what subscription level you’re on or if you’re buying credits, that’s a big price hike. But still, that’s significantly less than what Google is charging for Veo 2.

On the most expensive monthly plan, one 5-second gen will cost you $0.81.
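If you want to run the numbers yourself, here’s a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above (100 credits now, 70 before, $0.81 per 5-second gen on the top monthly plan). One big assumption: that the dollar price per credit didn’t change when the credit cost went up, so the "old" cost is an estimate, not a quoted price. The function and variable names are ours, purely for illustration.

```python
# Back-of-the-envelope Kling pricing math using the figures quoted above.
# ASSUMPTION: the dollar price per credit is the same before and after the
# credit increase, so the old per-gen cost below is an estimate, not a quote.

NEW_CREDITS_PER_GEN = 100  # Kling 2.0, per 5-second output
OLD_CREDITS_PER_GEN = 70   # previous cost, per 5-second output
NEW_COST_USD = 0.81        # one 5-second gen on the most expensive monthly plan
CLIP_SECONDS = 5


def price_per_credit(cost_usd: float, credits: int) -> float:
    """Dollar price of a single credit, given what one gen costs."""
    return cost_usd / credits


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, as a percentage."""
    return (new - old) / old * 100


usd_per_credit = price_per_credit(NEW_COST_USD, NEW_CREDITS_PER_GEN)
estimated_old_cost = usd_per_credit * OLD_CREDITS_PER_GEN
hike = percent_increase(OLD_CREDITS_PER_GEN, NEW_CREDITS_PER_GEN)
usd_per_second = NEW_COST_USD / CLIP_SECONDS

print(f"Price per credit:       ${usd_per_credit:.4f}")
print(f"Estimated old gen cost: ${estimated_old_cost:.2f}")
print(f"Credit increase:        {hike:.0f}%")
print(f"Cost per second:        ${usd_per_second:.2f}")
```

Under that assumption, going from 70 to 100 credits works out to roughly a 43% jump per clip. Cheaper plans or one-off credit packs will have a different price per credit, so swap in your own numbers.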

A slightly thornier issue: Kling is made by Kuaishou Technology, a large Chinese tech firm, and if you have issues with how AI video models are trained, then you may want to avoid it. The hard truth right now is that the Chinese video models (including MiniMax) are some of the best, and it’s more than likely these were trained on a massive corpus of films and TV shows. But it’s impossible to know because none of these companies (including the American ones) are opening their datasets for us to see.

We’re excited to dig in a bit more and push Kling to see what it does and, I guess, open our wallets to get a few more credits to put into the ol’ machine. Have I mentioned lately we have a Patreon tip jar for this exact reason? 😢

More on Thursday!

-Gavin (and Kevin)

3 Things To Know Today

Is OpenAI About To Launch a Social Network? Plus, GPT-4.1 Launches

Breaking news from The Verge’s Kylie Robison this AM: It sounds like OpenAI is thinking about a social network, specifically around images.

We actually think this is a great idea to help discoverability of image prompts and to get creators seen. The Sora home page is a bit of a mess right now. Could this also be how they pay creators eventually?

Also, OpenAI is widely expected to launch their o3 model at some point this week and just in time, as Google Gemini 2.5 Pro has taken the AI lead across MANY use cases like coding & deep research. But before that, they decided to GO BACKWARDS (kind of) and are releasing a new version of GPT-4o called GPT-4.1 but only for developers.

What is going on here? How many names can OpenAI really roll out? The basics: GPT-4.1 is significantly cheaper for developers, performs better than some reasoning models and in some cases, even beats GPT-4.5.

Google Created An AI To Talk To Dolphins

Have you ever wanted to talk to your dog? No, I’m not counting that one TikTok dog who pushes the buttons. I mean really understand what they’re saying?

Well, Google DeepMind is hoping its work on DolphinGemma, a new AI designed specifically to understand what dolphins are saying, may get us to that dog-speaking universe.

NVIDIA’s Big Texas Move: AI Supercomputers Made in the USA

This has been quite the week in the world, as your 401(k) can tell you. As markets get spooked about who’s going to place tariffs on whom, NVIDIA announced they're teaming up with Foxconn and Wistron to mass-produce AI supercomputers in Texas.

Why should you care? Well, bringing production stateside means more control, faster innovation, and fewer supply chain headaches. Is this the AI boom’s "made in America" moment? It might be the start of it.

We 💛 This - OpenAI’s 4o Image Gen Makes Pets into People

While OpenAI might be cracking down further on what we can and can’t do with 4o Image Gen (I was able to get this pretty fun vintage Barbie ad prompt to work), people are still discovering wild real-world ways to push the technology.

This is my dog, Ollie. I also think he’s the second bassist in Phish.

Even though this prompt has gone fully mainstream (both of my daughters were doing it without any input on my part), it shows the insane power of what 4o Image Generation can accomplish. Not that long ago, you would’ve needed a whole heck of a lot of weird ComfyUI spaghetti strings to pull something like this off, and now it’s as easy as asking ChatGPT. Just use the prompt in the image above and upload a photo of your pet.

Are you a creative or brand looking to go deeper with AI?

Join our community of collaborative creators on the AI4H Discord

Get exclusive access to all things AI4H on our Patreon

If you’re an org, consider booking Kevin & Gavin for your next event!