Today on AI For Humans:

AI Takeoff and YOU

TikTok Getting SOTA AI Video

Plus, a (good) AI History Game!

Welcome to the AI For Humans newsletter!

I saw this from OpenAI/Sora researcher Gabriel Peterson and it made my skin crawl.

Not just in the ‘ugh, people who work at these AI labs need to be more aware of how they sound’ way, but in the ‘oh boy, he might be right’ way.

If you’re not steeped in years of AI lingo, you might just quickly scroll by that tweet, not giving it a second glance.

But in the inner circles of AI Twitter (and, clearly, among the leading AI labs themselves), the conversation about the AI takeoff is very, very loud.

Let’s dig into what this is and why it matters to you, the human.

Please support AI For Humans by learning about our sponsors below:

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

So…What Is AI Takeoff?

The simplest definition of AI takeoff is the moment (or period of time) where AI models begin to improve much faster than ever before, mostly because they can improve themselves.

This might sound somewhat charming (‘Oh, they’re working on themselves! How fun!’) but what matters here is recursive self-improvement and exponential growth.

Once the AI gets better at making itself better, that improvement compounds back upon itself again and again until it’s improving MUCH faster than before.

It’s a weird concept for us humans to grasp.

After all, we kind of have an upper limit to our ability to learn and grow.

One of the great tech explainers of our time, Tim Urban of Wait, But Why, wrote a blog post TEN YEARS AGO that contains a simple illustration that does more to explain this idea than reading 100 Wikipedia pages.

In Tim’s illustration (especially the second part), you see how the moment before massive change can feel quite normal to us humans.

We’re sitting on the edge of it happening. Things feel like they always felt because we can’t see the changes coming our way.

The AI takeoff is the moment when this improvement begins, and for most of us, it would be nearly impossible to see.

But Gabriel and others inside the AI labs are now saying the quiet part out loud.

For example: Anthropic just dropped a 2.5x faster version of Opus 4.6 only days after announcing the new model.

The AI takeoff is happening. So now what?

Why AI Takeoff Matters And How The World Changes…

The thing about the Claude Code and OpenClaw moments is that both of them exposed more of the human population to what these AI tools are now capable of.

And then, in last week’s release of OpenAI’s GPT-5.3 Codex model, we got the first official confirmation that one of these models actually worked on itself.

OpenAI's models now working on themselves!

It’s not hard to see where we’re headed.

Yes, these AIs are soon going to be much more capable than ever before and we’ll be turning more of our work over to them.

But AI takeoff is actually a bigger idea and one that’s kind of scary.

If AI takeoff happens, it’s not just about being aware of these tools and using them…

It’s about preparing yourself for an entirely different world.

Again, I’ll go back to Wait, But Why for another illustration…

It’s not just about the idea of catching up…

It’s the idea that we will never catch up.

That there will be a huge gap, starting now, between the capabilities of humans and these AI systems, and that gap will get wider and wider with each passing day.

A few days ago, I revisited the AI 2027 paper, which I recommend y’all read as well.

Yes, it’s a science fiction look at the way the world could go (and maybe a little too doomer-ish), but it does paint a clear picture of what an AI takeoff looks like and how our lives could change over that time.

Ok, I’m Freaked Out Now…What The Hell Do You Want Me To Do?

Sorry! I’m not trying to scare y’all, just trying to bring some clarity to the idea of AI takeoff and what it might mean in the next few years.

After all, as I said at the top of this, that tweet kind of freaked me out as well.

One thing I keep coming back to is what it’s going to mean to be human in a world of Artificial Super Intelligence.

Unlike some people, I really want to believe that we can achieve some level of balance with the machines and that there’ll be a place for humans in this world.

Things that I believe will always matter, and that you should personally work on during this time:

Creativity: Do not underestimate your ability to engage your personal creativity, even if your day-to-day job doesn’t involve it right now. Novel creative thought will be something that humans will be good at for a long time and will still provide serious economic value.

Relationships To Other Humans: We crave human-to-human connection, and just because AIs can do a lot of our work doesn’t mean we’ll want them to be the ones talking to people. Think about how to improve your connections with your fellow humans and ways you might be able to help them (personally or professionally). AI Agents, while charming, aren’t human and never will be.

Entrepreneurship: What can you do to become an owner of something you create or have a hand in? Even if an AI ends up copying what you made, there’s a world where just the act of making it in the first place provides remarkable creative and economic value for you. In fact, I’ve begun to think that original systems or workflows from humans that feed AIs could be the next big thing.

It’s not a perfect list but it’s what I’m working on myself.

I’d love to hear your thoughts as well. Shoot me an email at gavin AT gavinpurcell dot com. Maybe I’ll collect some of the best responses and replies in the next newsletter.

See you next Friday for a new episode!

-Gavin

This week’s AI For Humans: Updates From OpenAI (GPT-5.3) & Anthropic (Opus 4.6) 👇

3 Things To Know About AI Today

Seedance 2.0: ByteDance Enters SOTA AI Video Conversation

TikTok parent company ByteDance (now a minority shareholder of the American TikTok) has been cooking behind the scenes.

They’ve always had decent AI image and video models, but the leaks of the new one are very good.

While nothing has been ‘officially’ released and most of these videos are being shared by people scraping whatever examples they find on Chinese social media, the results speak for themselves.

Between Kling 3.0’s big launch last week and this, I can’t wait to see what comes from the next Veo and Sora models.

Romance Writer Using Claude To Write 200 Books In One Year

As a writer (both of fiction and non-fiction), I have an uneasy feeling about using AI to write books for humans to read.

I’m a believer in using AI for structure, but I feel like the words should come from me.

But as a reader, I’m now wondering… have I been reading AI writing already?

The NY Times reported this weekend on the work of Coral Hart, a real romance novelist, and how she used AI to go from 8 to 10 romance novels a year to over 200.

My biggest question as a fiction reader: Are those novels enjoyable? Do people actually care? Is romance writing really that formulaic?

But the larger question I have as a writer:

If I don’t do this, do I get buried by the avalanche of others doing it?

This is going to be one of the biggest questions for creatives at large, and it probably points to some sort of ‘human writing only’ branding in the near future.

As for Coral Hart, she’s also teaching people how to do this… which is rapidly becoming the financial end game for all creatives.

AI Fail: Winter Olympics Shoot Themselves In The Foot

The capabilities of AI video are remarkable, and we’ve said that “AI slop” isn’t about the tool; it’s about the intention and creativity put into the work.

That’s why we’re disappointed to see the Olympics use this low-quality AI video montage of White Lotus star Sabrina Impacciatore skiing through the old logos.

Using AI in ways that make it look bad? On a huge, worldwide stage?

Lotta angry artists. Big surprise.

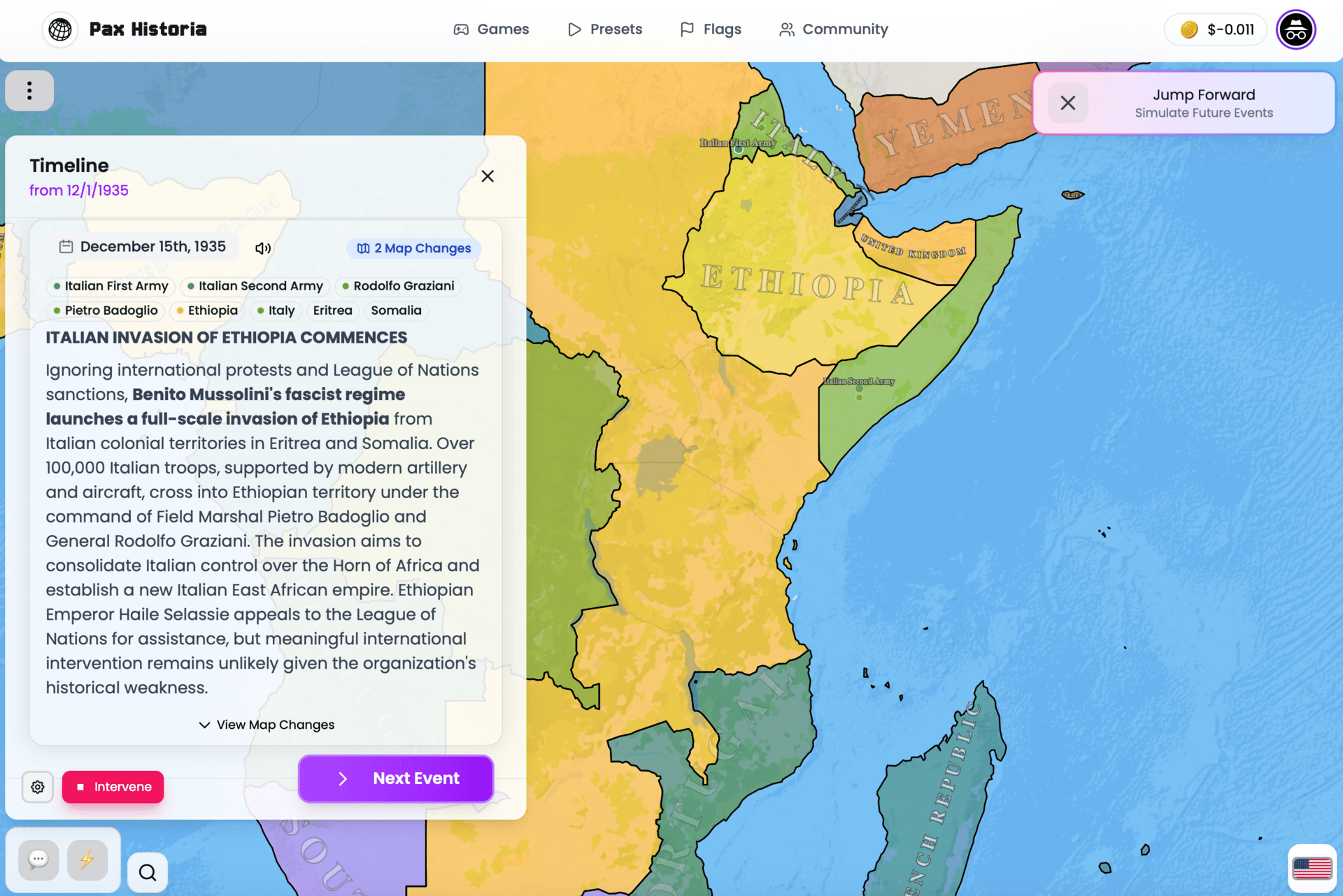

We 💛 This: Pax Historia AI History Game

I was (and still am) a big board game nerd. I love Settlers of Catan and have an endless need to discover more (please send me good ones).

Axis & Allies was an early favorite in my life. It’s essentially a more detailed and larger form of Risk, letting you re-create and re-play WWII from either side.

If that was your jam too, you’re gonna love the new AI game Pax Historia.

It’s a very smart integration of LLMs into a strategy game harness, letting you use natural language to play through either historical events or modern scenarios.

The only downside? Like all modern AI tools, it ain’t a one-time payment.

Because it’s calling LLMs, you’ll need to pay to play, but it seems like lots of people are enjoying it enough to do so. You can try it for free and see if you agree.

Are you a creative or brand looking to go deeper with AI?

Join our community of collaborative creators on the AI4H Discord

Get exclusive access to all things AI4H on our Patreon

If you’re an org, consider booking Kevin & Gavin for your next event!