Today on AI For Humans The Newsletter!

Why this new Chinese AI model is a VERY big deal

It happened: an autonomous agent from OpenAI

A fantastic new AI video model from Pika

Plus, our can’t-miss AI feature of the week!

Welcome back to the AI For Humans Newsletter!

You’ve likely heard by now about Deepseek’s R1 model and how it tanked the US stock market on Monday. There are a number of reasons why this happened (and some pretty fascinating conspiracy theories out there), but it’s clear this has had a major impact on both the AI space & the mainstream user. The model is VERY good and compares well to OpenAI’s o1.

On the show this week we’ll specifically get into why everyone is freaking out (China isn’t far behind the US AI labs, there’s potential overspending on AI investment, NVIDIA’s cutting-edge tech might not be needed as much as we thought) but I want to zero in on something I don’t think enough people are talking about:

Deepseek’s R1 lets you see how the model is thinking in real time

You get to see how the AI actually thinks.

Go give it a try right now and you can see, step-by-step, how it approaches your query. Unlike OpenAI’s o1 model, which hides its ‘chain-of-thought’, Deepseek just types out everything it’s thinking. There’s something odd and eerie about seeing the machine brain work around what you ask it. It makes it feel a lot less random, and honestly, a lot more human.

For a lot of people (and I mean a LOT of people - it’s the number one app in Apple’s App Store right now), this is the first time they’ve ever seen an AI’s thought process. And because it’s free to use, it’s likely the most advanced AI any of them have interacted with.

This transparency not only enhances user understanding but also makes the AI feel more like an active reasoning partner rather than a black box spitting out answers. As more people engage with R1 and witness its step-by-step thought process, it shifts the perception of AI from a tool that simply generates responses to something that actively "thinks" through problems in a way that feels eerily human.

And that’s why this moment is bigger than just another AI release. For the first time, the average person can see how an AI reasons in real time—and it’s good. Maybe too good.

When people truly grasp that AI isn’t just a magic answer box but a structured intelligence that operates in a way that feels familiar, it brings us one step closer to realizing just how fast this technology is advancing.

Lots to think about. See you Thursday!

-Kevin & Gavin

3 Things To Know

OpenAI Launches An Autonomous AI Agent 👀

Dubbed Operator, the tool navigates a built-in browser to do your bidding. Still very early days, but it’s obviously the holy grail of AI applications. The launch demo nicely illustrates just how… beta it is. It’s only available to $200-a-month Pro subscribers for now, and for most applications it probably isn’t good enough to justify that price tag. But the day it gets good, well, things will change!

Don’t Sleep On This Model from Google(!)

While Deepseek has grabbed all the headlines lately, Google’s Gemini 2.0 Flash Thinking (facepalm) is a super impressive model that surpasses both o1 and R1 in many ways. The best part is you can try it for free here. And a nice little party trick: it comes with a 1 million token context window, meaning it can remember more than 1,000 pages of information at a time.

Pika’s New 2.1 Model Is Live

Nothing beats the sweet smell of new creative tools in the morning. With Pika 2.1 you get 1080p resolution from Pika for the first time, and more lifelike humans. It also comes with “Ingredients”, a feature launched in their 2.0 model that lets you provide images of a scene, a character, clothing, even props, and have the model put them all together into a video.

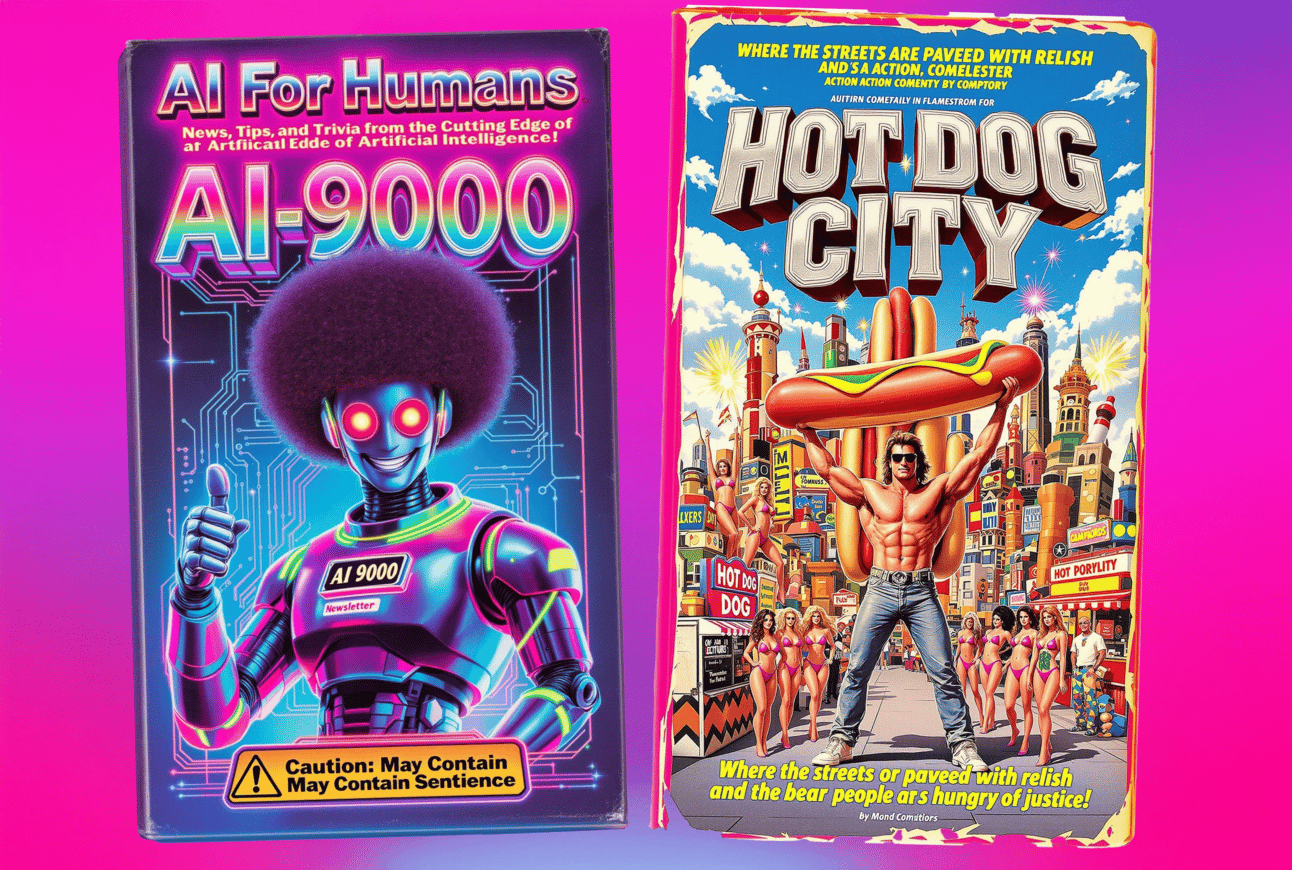

We 💛 This - Glif VHS Tape Creator

Glif’s a fun way to generate silly images for free, like this retro VHS tape creator.

It isn’t all fun and games though. Glifs are custom-built workflows to generate images of any kind. It’s a peek into more complex image generation workflows, and you’re able to see the blueprint of any workflow on the site.

The VHS generator for example is made up of 4 steps:

Take an input from the user - What do you want a video about?

Combine their input with the text “A VHS tape cover from 1980s to early 2000s”

Use llama3-70b to expand on the description, adding greater detail

And generate the image with the prompt, using the Flux Pro v1.1 Ultra model
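
If you like seeing the blueprint spelled out, here’s a rough Python sketch of those four steps. To be clear, this is not Glif’s actual code: the function names, the way the two pieces of text get joined, and the stand-in "expand" and "generate" functions are all our own assumptions. In the real workflow, llama3-70b and Flux Pro v1.1 Ultra would sit where the stand-ins are.

```python
from typing import Callable

# Fixed text from step 2 of the workflow, quoted as written above.
STYLE_TEXT = "A VHS tape cover from 1980s to early 2000s"


def combine_prompt(user_input: str) -> str:
    """Step 2: join the user's idea with the fixed VHS text.

    The ": " separator is an assumption; how Glif joins them is not stated.
    """
    return f"{STYLE_TEXT}: {user_input.strip()}"


def run_vhs_workflow(
    user_input: str,
    expand: Callable[[str], str],
    generate_image: Callable[[str], str],
) -> str:
    """Run the four steps in order and return whatever the image step returns."""
    base_prompt = combine_prompt(user_input)   # steps 1 and 2
    detailed_prompt = expand(base_prompt)      # step 3: a language model adds detail
    return generate_image(detailed_prompt)     # step 4: an image model renders it


# Hypothetical stand-ins so the sketch runs without any model access.
def fake_expand(prompt: str) -> str:
    return prompt + ", with bold title text and worn cardboard edges"


def fake_generate_image(prompt: str) -> str:
    return f"<image for: {prompt}>"


if __name__ == "__main__":
    print(run_vhs_workflow("a skateboarding cat", fake_expand, fake_generate_image))
```

The point is less the code and more the shape: each step just hands a piece of text to the next one, which is why these workflows are so easy to remix.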

Are you a creative or brand looking to go deeper with AI?

Join our community of collaborative creators on the AI4H Discord

Get exclusive access to all things AI4H on our Patreon

If you’re an org, consider booking Kevin & Gavin for your next event!